A branch of Computer Science named Artificial Intelligence pursues creating the computers or machines as intelligent as human beings.

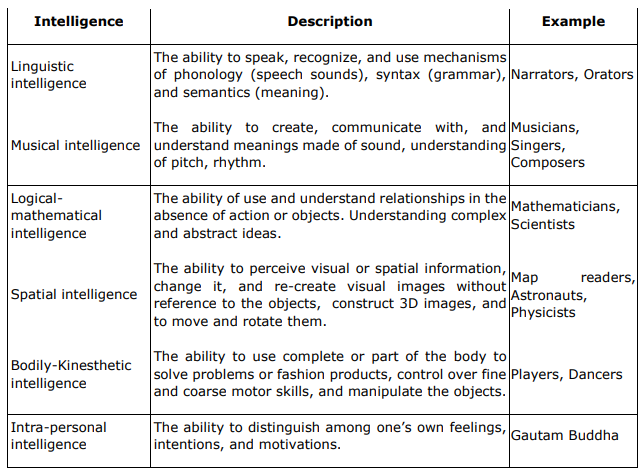

There are three kinds of Intelligence.One can understands things directly for it self,

The other appreciates what others can understand,

The third neither understands by itself nor through others.

This first kind is Excellent second is good, third kind is useless.

What is Artificial Intelligence?

John McCarthy, is known as the father of Artificial Intelligence

According to John McCarthy, it is “The science and engineering of making intelligent machines, especially intelligent computer programs”.

Artificial Intelligence is a way of making a computer, a computer-controlled robot, or a software think intelligently, in the similar manner the intelligent humans think.

AI is accomplished by studying how human brain thinks, and how humans learn, decide, and work while trying to solve a problem, and then using the outcomes of this study as a basis of developing intelligent software and systems.

Goals of AI

To Create Expert Systems: The systems which exhibit intelligent behavior, learn, demonstrate, explain, and advice its users.

To Implement Human Intelligence in Machines: Creating systems that understand, think, learn, and behave like humans.

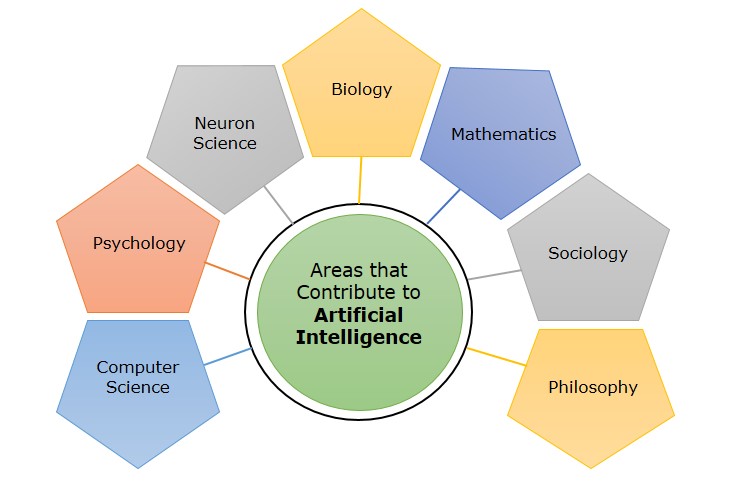

Artificial intelligence is a science and technology based on disciplines such as Computer Science, Biology, Psychology, Linguistics, Mathematics, and Engineering. A major thrust of AI is in the development of computer functions associated with human intelligence, such as reasoning, learning, and problem solving.

Out of the following areas, one or multiple areas can contribute to build an intelligent system.

Components of AI.

Programming Without and With AI

Programming Without AI

1. A computer program without AI can answer the specific questions it is meant to solve.

2. Modification in the program leads to change in its structure.

3. Modification is not quick and easy. It may lead to affecting the program adversely.

Programming With AI

1. A computer program with AI can answer the generic questions it is meant to solve.

2. AI programs can absorb new modifications by putting highly independent pieces of information together. Hence you can modify even a minute piece of information of program without affecting its structure.

3. Quick and Easy program modification.

Applications of AI

AI has been dominant in various fields such as

Gaming: AI plays crucial role in strategic games such as chess, poker, tic-tac-toe, etc., where machine can think of large number of possible positions based on heuristic knowledge.

Natural Language Processing: It is possible to interact with the computer that understands natural language spoken by humans.

Expert Systems: There are some applications which integrate machine, software, and special information to impart reasoning and advising. They provide explanation and advice to the users.

Vision Systems: These systems understand, interpret, and comprehend visual input on the computer. For example,

A spying aero plane takes photographs, which are used to figure out spatial information or map of the areas.

Doctors use clinical expert system to diagnose the patient.

Police use computer software that can recognize the face of criminal with the stored portrait made by forensic artist.

Speech Recognition: Some intelligent systems are capable of hearing and comprehending the language in terms of sentences and their meanings while a human talks to it. It can handle different accents, slang words, noise in the background, change in human’s noise due to cold, etc.

Handwriting Recognition: The handwriting recognition software reads the text written on paper by a pen or on screen by a stylus. It can recognize the shapes of the letters and convert it into editable text.

Intelligent Robots: Robots are able to perform the tasks given by a human. They have sensors to detect physical data from the real world such as light, heat, temperature, movement, sound, bump, and pressure. They have efficient processors, multiple sensors and huge memory, to exhibit intelligence.

In addition, they are capable of learning from their mistakes and they can adapt to the new environment.

HISTORY OF AI

1950: Claude Shannon, “the father of information

theory,” published “Programming a Computer for Playing Chess,” which was the

first article to discuss the development of a chess-playing computer program.

1950: Alan Turing published “Computing Machinery and

Intelligence,” which proposed the idea of The Imitation Game – a question that

considered if machines can think. This proposal later became The Turing Test, which measured machine (artificial) intelligence. Turing’s development

tested a machine’s ability to think as a human would. The Turing Test became an

important component in the philosophy of artificial intelligence, which

discusses intelligence, consciousness, and ability in machines.

1952: Arthur

Samuel, a computer scientist, developed a checkers-playing computer program –

the first to independently learn how to play a game.

1955: John

McCarthy and a team of men created a proposal for a workshop on “artificial

intelligence.” In 1956 when the workshop took place, the official birth of the

word was attributed to McCarthy.

1955: Allen

Newell (researcher), Herbert Simon (economist), and Cliff Shaw (programmer)

co-authored Logic Theorist, the first artificial intelligence computer program.

1958: McCarthy

developed Lisp, the most popular and still favored programming language for

artificial intelligence research.

1959: Samuel

coined the term “machine learning” when speaking about programming a computer

to play a game of chess better than the human who wrote its program.

AI

in the 1960s

Innovation

in the field of artificial intelligence grew rapidly through the 1960s. The

creation of new programming languages, robots and automatons, research studies,

and films that depicted artificially intelligent beings increased in

popularity. This heavily highlighted the importance of AI in the second half of

the 20th century.

1961: Unimate an industrial robot invented by George Devol in the

1950s, became the first to work on a General Motors assembly line in New

Jersey. Its responsibilities included transporting die castings from the

assembly line and welding the parts on to cars – a task deemed dangerous for

humans.

1961: James

Slagle, computer scientist and professor, developed SAINT (Symbolic Automatic

INTegrator), a heuristic problem-solving program whose focus was symbolic

integration in freshman calculus.

1964: Daniel

Bobrow, computer scientist, created STUDENT, an early AI program written in

Lisp that solved algebra word problems. STUDENT is cited as an early milestone

of AI natural language processing.

1965: Joseph

Weizenbaum, computer scientist and professor, developed ELIZA an internet program that could functionally converse

in English with a person. Weizenbaum’s goal was to demonstrate how

communication between an artificially intelligent mind versus a human mind was

“superficial,” but discovered many people attributed anthropomorphic

characteristics to ELIZA.

1966: Shakey the

Robot, developed by Charles Rosen with the help of 11 others, was the first

general-purpose mobile robot, also known as the “first electronic person.”

1968: The sci-fi film 2001:

A Space Odyssey, directed by Stanley Kubrick, is released. It features HAL

(Heuristically programmed ALgorithmic computer), a sentient computer. HAL

controls the spacecraft’s systems and interacts with the ship’s crew,

conversing with them as if HAL were human until a malfunction changes HAL’s

interactions in a negative manner.

1968: Terry Winograd, professor of computer science, created SHRDLU, an early natural language computer program.

AI

in the 1970s:

Like

the 1960s, the 1970s gave way to accelerated advancements, particularly

focusing on robots and automatons. However, artificial intelligence in the

1970s faced challenges, such as reduced government support for AI research.

1970: WABOT-1, the first

anthropomorphic robot, was built in Japan at Waseda University. Its features

included moveable limbs, ability to see, and ability to converse.

1973: James Lighthill, applied mathematician, reported the state of artificial intelligence research to the British Science Council, stating: “in no part of the field have discoveries made so far produced the major impact that was then promised,” which led to significantly reduced support in AI research via the British government.

1977: Director George Lucas’

film Star Wars is released. The film features C-3PO, a

humanoid robot who is designed as a protocol droid and is “fluent in more than

seven million forms of communication.” As a companion to C-3PO, the film also

features R2-D2 – a small, astromech droid who is incapable of human speech (the

inverse of C-3PO); instead, R2-D2 communicates with electronic beeps. Its

functions include small repairs and co-piloting starfighters.

1979: The Stanford Cart, a remote controlled, tv-equipped mobile robot was created by then- mechanical engineering grad student James L. Adams in 1961. In 1979, a “slider,” or mechanical swivel that moved the TV camera from side-to-side, was added by Hans Moravec, then-PhD student. The cart successfully crossed a chair-filled room without human interference in approximately five hours, making it one of the earliest examples of an autonomous vehicle.

AI

in the 1980s

The

rapid growth of artificial intelligence continued through the 1980s. Despite

advancements and excitement behind AI, caution surrounded an inevitable “AI

Winter,” a period of reduced funding and interest in artificial intelligence.

1980: WABOT-2 was built at

Waseda University. This inception of the WABOT allowed the humanoid to

communicate with people as well as read musical scores and play music on an electronic

organ.

1981: The Japanese Ministry of International Trade and Industry allocated $850 million to the Fifth Generation Computer project, whose goal was to develop computers that could converse, translate languages, interpret pictures, and express humanlike reasoning.

1984: The film Electric Dreams, directed by Steve Barron, is released. The plot revolves around a love triangle between a man, woman, and a sentient personal computer called “Edgar.”

1984: At the

Association for the Advancement of Artificial Intelligence (AAAI), Roger Schank

(AI theorist) and Marvin Minsky (cognitive scientist) warn of the AI winter,

the first instance where interest and funding for artificial intelligence

research would decrease. Their warning came true within three years’ time.

1986: Mercedes-Benz

built and released a driverless van equipped with cameras and sensors under the

direction of Ernst Dickmanns. It was able to drive up to 55 mph on a road with

no other obstacles nor human drivers.

1988: Computer

scientist and philosopher Judea Pearl published “Probabilistic Reasoning in

Intelligent Systems.” Pearl is also credited with inventing Bayesian networks,

a “probabilistic graphical model” that represents sets of variables and their

dependencies via directed acyclic graph (DAG).

AI in the 1990s

The end of the millennium was on the horizon, but this

anticipation only helped artificial intelligence in its continued stages of

growth.

1995: Computer

scientist Richard Wallace developed the chatbot A.L.I.C.E (Artificial

Linguistic Internet Computer Entity), inspired by Weizenbaum's ELIZA. What

differentiated A.L.I.C.E. from ELIZA was the addition of natural language

sample data collection.

1997: Computer scientists

Sepp Hochreiter and Jürgen Schmidhuber developed Long Short-Term Memory (LSTM),

a type of a recurrent neural network (RNN) architecture used for handwriting

and speech recognition.

1997: Deep Blue, a chess-playing computer developed by IBM became the first system to win a chess game and match against a reigning world champion.

1997: Deep Blue, a chess-playing computer developed by IBM became the first system to win a chess game and match against a reigning world champion.

1998: Dave Hampton and Caleb

Chung invented Furby, the first “pet” toy robot for children.

1999: In line with Furby,

Sony introduced AIBO (Artificial Intelligence RoBOt), a $2,000 robotic pet dog

crafted to “learn” by interacting with its environment, owners, and other

AIBOs. Its features included the ability to understand and respond to 100+

voice commands and communicate with its human owner.

AI from 2000-2010

The new millennium was underway – and after the fears of Y2K

died down – AI continued trending upward. As expected, more artificially

intelligent beings were created as well as creative media (film, specifically)

about the concept of artificial intelligence and where it might be headed.

2000: The Y2K

problem, also known as the year 2000 problem, was a class of computer bugs

related to the formatting and storage of electronic calendar data beginning on

01/01/2000. Given that all internet software and programs had been created in

the 1900s, some systems would have trouble adapting to the new year format of

2000 (and beyond). Previously, these automated systems only had to change the

final two digits of the year; now, all four digits had to be switched over – a

challenge for technology and those who used it.

2000: Professor Cynthia

Breazeal developed Kismet, a robot that could recognize and simulate emotions

with its face. It was structured like a human face with eyes, lips, eyelids,

and eyebrows.

2000: Honda releases ASIMO,

an artificially intelligent humanoid robot.

2001: Sci-fi film A.I.

Artificial Intelligence, directed by Steven Spielberg, is released. The

movie is set in a futuristic, dystopian society and follows David, an advanced

humanoid child that is programmed with anthropomorphic feelings, including the

ability to love.

2002: i-Robot released

Roomba, an autonomous robot vacuum that cleans while avoiding obstacles.

2004: NASA's robotic exploration

rovers Spirit and opportunity to navigate Mars’ surface

without human intervention.

2004: Sci-fi film I,

Robot, directed by Alex Proyas, is released. Set in the year 2035, humanoid

robots serve humankind while one individual is vehemently anti-robot, given the

outcome of a personal tragedy (determined by a robot.)

2006: Oren

Etzioni (computer science professor), Michele Banko, and Michael Cafarella

(computer scientists), coined the term “machine reading,” defining it as

unsupervised autonomous understanding of text.

2007: Computer

science professor Fei Fei Li and colleagues assembled ImageNet, a database of

annotated images whose purpose is to aid in object recognition software

research.

2009: Google secretly developed a driverless car. By 2014, it passed Nevada’s self-driving test.

2009: Google secretly developed a driverless car. By 2014, it passed Nevada’s self-driving test.

AI 2010 to present day

The current decade has been immensely important for AI

innovation. From 2010 onward, artificial intelligence has become embedded in

our day-to-day existence. We use smartphones that have voice assistants and

computers that have “intelligence” functions most of us take for granted. AI is

no longer a pipe dream and hasn’t been for some time.

2010: ImageNet

launched the ImageNet Large Scale Visual Recognition Challenge (ILSVRC), their

annual AI object recognition competition.

2010: Microsoft launched

Kinect for Xbox 360, the first gaming device that tracked human body movement

using a 3D camera and infrared detection.

2011: Watson, a natural

language question answering computer created by IBM, defeated two former Jeopardy! champions,

Ken Jennings and Brad Rutter, in a televised game.

2011: Apple released Siri, a virtual assistant on Apple iOS operating systems. Siri uses a natural-language user interface to infer, observe, answer, and recommend things to its human user. It adapts to voice commands and projects an “individualized experience” per user.

2012: Jeff Dean and Andrew Ng (Google researchers) trained a large neural network of 16,000 processors to recognize images of cats (despite giving no background information) by showing it 10 million unlabeled images from YouTube videos.

2013: A research team from

Carnegie Mellon University released Never Ending Image Learner (NEIL), a

semantic machine learning system that could compare and analyze image

relationships.

2014: Microsoft released

Cortana, their version of a virtual assistant similar to Siri on iOS.

2014: Amazon created Amazon

Alexa, a home assistant that developed into smart speakers that function as

personal assistants.

2015: Elon Musk, Stephen

Hawking, and Steve Wozniak among 3,000 others signed an open letter banning the

development and use of autonomous weapons (for purposes of war.)

2015-2017: Google Deep Mind’s

Alpha Go, a computer program that plays the board game Go, defeated

various (human) champions.

2016: A Humanoid robot named Sophia is created by Hanson Robotics. She is known as the first “robot citizen.” What distinguishes Sophia from previous humanoids is her likeness to an actual human being, with her ability to see (Image recognisation), make facial expressions, and communicate through AI.

2016: Google

released Google Home, a smart speaker that uses AI to act as a “personal

assistant” to help users remember tasks, create appointments, and search for

information by voice.

2017: The

Facebook Artificial Intelligence Research lab trained two “dialog agents”

(chatbots) to communicate with each other in order to learn how to negotiate.

However, as the chatbots conversed, they diverged from human language

(programmed in English) and invented their own language to communicate with one

another – exhibiting artificial intelligence to a great degree.

2018: Alibaba

(Chinese tech group) language processing AI outscored human intellect at a

Stanford reading and comprehension test. The Alibaba language processing scored

“82.44 against 82.30 on a set of 100,000 questions” – a narrow defeat, but a

defeat nonetheless.

2018: Google

developed BERT, the first “bidirectional, unsupervised language representation

that can be used on a variety of natural language tasks using transfer

learning.”

2018: Samsung introduced

Bixby, a virtual assistant. Bixby’s functions include Voice, where

the user can speak to and ask questions, recommendations, and

suggestions; Vision, where Bixby’s “seeing” ability is built into

the camera app and can see what the user sees (i.e. object identification,

search, purchase, translation, landmark recognition); and Home,

where Bixby uses app-based information to help utilize and interact with the

user (e.g. weather and fitness applications.)

What to expect for AI in 2019 and beyond?

Artificial intelligence advancements are occurring at an

unprecedented rate. That being said, we can expect that the trends from the

past decade will continue swinging upward in the coming year. A few things to

keep our eyes on in 2019 include:

Chatbots+

virtual assistants: Strengthened chatbot and virtual assistant

automation for heightened user experience

Natural Language Processing(NLP): Increased NLP

abilities for artificially intelligent apps, including (and especially for)

chatbots and virtual assistants.

Machine Learning and Automated

Machine Learning: ML will shift toward Auto ML algorithms

to allow developers and programmers to solve problems without creating specific

models

Autonomous vehicles: Despite

some bad press surrounding various faulty self-driving vehicles, it’s safe to

assume there will be a stronger push to automate the process of driving product

from point A to point B to 1. Save on the cost of human labor, 2. Optimize the

process of purchase-shipment-arrival to consumer via self-driving vehicles that

– in essence – won’t get tired behind the wheel

Our goal? Keeping pace with advancements in AI

In order to keep up with the world of tech, we have to keep

pace with innovations in artificial intelligence. From humanoid robots like

Sophia to home speaker assistants like Alexa, AI is advancing at an accelerated

rate. Someday, humans will have artificially intelligent companions beyond toys

like AIBO or Furby; someday, AI and humankind might coexist in a fashion where

humans and humanoids are indistinguishable from one another.

Artificial Intelligence is an umbrella that

contains other realms like image processing, cognitive science, neural networks.

The word Artificial Intelligence comprises of two words “Artificial” and

“Intelligence”. Artificial refers to something which is made by human or non

natural thing and Intelligence means ability to understand or think. There is a

misconception that Artificial Intelligence is a system, but it is not a system

.AI is implemented in the system.

Definition: AI, one definition can be “It is the study of how to

train the computers so that computers can do things which at present human can

do better. Therefore It is a intelligence where we want to

add all the capabilities to machine that human contain.

Task Domains of Artificial Intelligence:

Task Domains of Artificial Intelligence:

1.2 Intelligent

Agent:

An agent is anything that can perceive its environment

through sensors and acts upon that Environment through effectors.

·

A human agent has sensory organs such as eyes,

ears, nose, tongue and skin parallel to the sensors, and other organs such as

hands, legs, mouth, for Effectors.

·

A robotic agent replaces cameras and infrared range

finders for the sensors, and various motors and actuators for effectors.

·

A software agent has encoded bit strings as its

programs and actions.

Agent

Terminology

·

Performance Measure of Agent −

It is the criteria, which determines how successful an agent is.

·

Behaviour of Agent −

It is the action that agent performs after any given sequence of percepts.

·

Percept − It is agent’s

perceptual inputs at a given instance.

·

Percept Sequence −

It is the history of all an agent has perceived till date.

Fig: Agent and Environment

Fig: Agent and Environment

Rationality:

Rationality is the status of being reasonable, sensible, and having good sense of judgment.

Rationality is concerned with expected actions and results depending upon what the agent has perceived. Performing actions with the aim of obtaining useful information is an important part of rationality.

Rationality of an agent depends on the following −

- The performance measures, which determine the degree of success.

- Agent’s Percept Sequence till now.

- The agent’s prior knowledge about the environment.

- The actions that the agent can carry out.

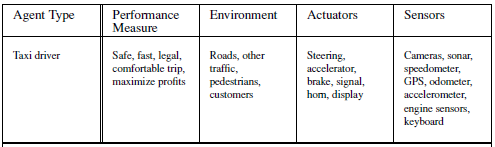

- Specifying the task environment:

- PEAS(Performance, Environment, Actuators, And Sensors)

Types of Intelligent Agents:

The Structure

of Intelligent Agents

Agent’s structure can be

viewed as

- Agent =

Architecture + Agent Program

- Architecture =

the machinery that an agent executes on.

- Agent Program

= an implementation of an agent function.

Simple Reflex Agents

The simplest kind of agent is the simple SIMPLE REFLEX reflex agent.

These agents select actions on the basis of the current percept, ignoring the rest of the percept history.

FIG: Simple Reflex Agent

Simple reflex agents have the admirable property of being simple, but they turn out to be

of limited intelligence.

The simplest kind of agent is the simple SIMPLE REFLEX reflex agent.

These agents select actions on the basis of the current percept, ignoring the rest of the percept history.

FIG: Simple Reflex Agent

Simple reflex agents have the admirable property of being simple, but they turn out to be

of limited intelligence.

- They choose

actions only based on the current percept.

- They are

rational only if a correct decision is made only on the basis of current

precept.

- Their

environment is completely observable.

Condition-Action Rule − It is a rule that

maps a state (condition) to an action.

function SIMPLE-REFLEX-AGENT (percept ) returns an action

persistent: rules, a set of condition–action rules

state←INTERPRET-INPUT(percept )

rule←RULE-MATCH(state, rules)

action ←rule.ACTION

return action.

A simple reflex agent. It acts according to a rule whose condition matches

the current state, as defined by the percept.

function SIMPLE-REFLEX-AGENT (percept ) returns an action

persistent: rules, a set of condition–action rules

state←INTERPRET-INPUT(percept )

rule←RULE-MATCH(state, rules)

action ←rule.ACTION

return action.

A simple reflex agent. It acts according to a rule whose condition matches

the current state, as defined by the percept.

Model Based

Reflex Agents

They use a model of the world to choose their actions. They

maintain an internal state.

Model − knowledge about “how the things happen in the world”.

Internal State − It is a representation of unobserved

aspects of current state depending on percept history.

A Model Based Reflex Agents:

Fig: A model-based reflex agent. It keeps track of the current state of the world,

using an internal model. It then chooses an action in the same way as the reflex agent.

A Model Based Reflex Agents:

Fig: A model-based reflex agent. It keeps track of the current state of the world,

using an internal model. It then chooses an action in the same way as the reflex agent.

Updating

the state requires the information about −

- How the world

evolves.

- How the agent’s

actions affect the world.

Goal Based Agents

They choose their actions in order to achieve goals. Goal-based

approach is more flexible than reflex agent since the knowledge supporting a

decision is explicitly modeled, thereby allowing for modifications.

Sometimes goal-based action selection is straightforward—for example, when goal satisfaction

results immediately from a single action. Sometimes it will be more tricky—for

example, when the agent has to consider long sequences of twists and turns in order to find a

way to achieve the goal.

goal-based action selection is straightforward,

Goal − It is the description of desirable situations.

Sometimes goal-based action selection is straightforward—for example, when goal satisfaction

results immediately from a single action. Sometimes it will be more tricky—for

example, when the agent has to consider long sequences of twists and turns in order to find a

way to achieve the goal.

goal-based action selection is straightforward,

Goal − It is the description of desirable situations.

Goal − It is the

description of desirable situations.

Utility Based Agents

Goals alone are not enough to generate high-quality behavior in most environments.

They choose actions

based on a preference (utility) for each state.

Goals are inadequate

when −

·

There are conflicting goals, out of which only few can be

achieved.

·

Goals have some uncertainty of being achieved and you need to

weigh likelihood of success against the importance of a goal.

A model-based, utility-based agent. It uses a model of the world, along with

a utility function that measures its preferences among states of the world. Then it chooses the

action that leads to the best expected utility, where expected utility is computed by averaging

over all possible outcome states, weighted by the probability of the outcome.

Learning Agents:

A learning agent can be divided into four conceptual components

The most important distinction is between LEARNING ELEMENT the learning element, which is responsible for making improvements, and the performance element, which is responsible for selecting external actions. The performance element is what we have previously considered to be the entire agent: it takes in percepts and decides on actions. The design of the learning element depends very much on the design of the performance

element.The critic tells the learning element how well the agent is doing with respect to a fixed

performance standard. The critic is necessary because the percepts themselves provide no

indication of the agent’s success.

A General Learning Agent

a utility function that measures its preferences among states of the world. Then it chooses the

action that leads to the best expected utility, where expected utility is computed by averaging

over all possible outcome states, weighted by the probability of the outcome.

Learning Agents:

A learning agent can be divided into four conceptual components

The most important distinction is between LEARNING ELEMENT the learning element, which is responsible for making improvements, and the performance element, which is responsible for selecting external actions. The performance element is what we have previously considered to be the entire agent: it takes in percepts and decides on actions. The design of the learning element depends very much on the design of the performance

element.The critic tells the learning element how well the agent is doing with respect to a fixed

performance standard. The critic is necessary because the percepts themselves provide no

indication of the agent’s success.

A General Learning Agent

The Nature of

Environments

Some programs operate in

the entirely artificial environment confined to keyboard

input, database, computer file systems and character output on a screen.

In contrast, some

software agents (software robots or soft bots) exist in rich, unlimited soft bots

domains. The simulator has a very detailed, complex environment.

The software agent needs to choose from a long array of actions in real time. A

soft bot designed to scan the online preferences of the customer and show

interesting items to the customer works in the real as well as

an artificial environment.

The most famous artificial

environment is the Turing Test environment, in which one

real and other artificial agents are tested on equal ground. This is a very

challenging environment as it is highly difficult for a software agent to

perform as well as a human.

Turing Test

The success of an

intelligent behavior of a system can be measured with Turing Test.

Two persons and a

machine to be evaluated participate in the test. Out of the two persons, one

plays the role of the tester. Each of them sits in different rooms. The tester

is unaware of who is machine and who is a human. He interrogates the questions

by typing and sending them to both intelligence, to which he receives typed

responses.

This test aims at

fooling the tester. If the tester fails to determine machine’s response from

the human response, then the machine is said to be intelligent.

Properties of

Environment

The environment has

multifold properties −

· Discrete / Continuous −

If there are a limited number of distinct, clearly defined, states of the

environment, the environment is discrete (For example, chess); otherwise it is

continuous (For example, driving).

· Observable / Partially Observable −

If it is possible to determine the complete state of the environment at each

time point from the percepts it is observable; otherwise it is only partially

observable.

· Static / Dynamic −

If the environment does not change while an agent is acting, then it is static;

otherwise it is dynamic.

· Single agent / Multiple agents −

The environment may contain other agents which may be of the same or different

kind as that of the agent.

· Accessible / Inaccessible −

If the agent’s sensory apparatus can have access to the complete state of the

environment, then the environment is accessible to that agent.

·

Deterministic / Non-deterministic −

If the next state of the environment is completely determined by the current

state and the actions of the agent, then the environment is deterministic;

otherwise it is non-deterministic.

·

Episodic / Non-episodic −

In an episodic environment, each episode consists of the agent perceiving and

then acting. The quality of its action depends just on the episode itself.

Subsequent episodes do not depend on the actions in the previous episodes.

Episodic environments are much simpler because the agent does not need to think

ahead.

Problem Space and Search:

A problem can be defined formally by Five components:

1.The initial state 2. Formulating problems 3.Actions 4. Transition Models 5. Goal State

function SIMPLE-PROBLEM-SOLVING-AGENT(percept ) returns an action

persistent: seq, an action sequence, initially empty

state, some description of the current world state

goal , a goal, initially null

problem, a problem formulation

state←UPDATE-STATE(state, percept )

if seq is empty then

goal ←FORMULATE-GOAL(state)

problem ←FORMULATE-PROBLEM(state, goal )

seq ←SEARCH(problem)

if seq = failure then return a null action

action ←FIRST(seq)

seq ←REST(seq)

return action

A simple problem-solving agent. It first formulates a goal and a problem,

searches for a sequence of actions that would solve the problem, and then executes the actions

one at a time. When this is complete, it formulates another goal and starts over.

Measuring problem-solving performance:

We can evaluate an algorithm’s performance in four ways:

Completeness: Is the algorithm guaranteed to find a solution when there is one?

Optimality : The strategy to find the optimal solution

Time complexity: How long does it take to find a solution?

Space complexity: How much memory is needed to perform the search?

The Factors that need to be defined for formulation of a problem.

Initial State : The state in which the agent starts or initial condition of the agent.

States : All states that are reachable from initial state by any sequence of actions or all possible states that the agent can take. This is also referred to as State space.

Actions : All possible actions that the agent can execute. Specifically, it provides the list of actions, that an agent can perform in a particular state. This is also referred to as Action space.

Transition Model : This property describes the results of each action taken in a particular state.

Goal Test : A way to check, whether a state is the goal.

Path Cost : A function that assigns a numeric cost to a path w.r.t. performance measure.

Problem Space and Search:

A problem can be defined formally by Five components:

1.The initial state 2. Formulating problems 3.Actions 4. Transition Models 5. Goal State

function SIMPLE-PROBLEM-SOLVING-AGENT(percept ) returns an action

persistent: seq, an action sequence, initially empty

state, some description of the current world state

goal , a goal, initially null

problem, a problem formulation

state←UPDATE-STATE(state, percept )

if seq is empty then

goal ←FORMULATE-GOAL(state)

problem ←FORMULATE-PROBLEM(state, goal )

seq ←SEARCH(problem)

if seq = failure then return a null action

action ←FIRST(seq)

seq ←REST(seq)

return action

A simple problem-solving agent. It first formulates a goal and a problem,

searches for a sequence of actions that would solve the problem, and then executes the actions

one at a time. When this is complete, it formulates another goal and starts over.

Measuring problem-solving performance:

We can evaluate an algorithm’s performance in four ways:

Completeness: Is the algorithm guaranteed to find a solution when there is one?

Optimality : The strategy to find the optimal solution

Time complexity: How long does it take to find a solution?

Space complexity: How much memory is needed to perform the search?

The Factors that need to be defined for formulation of a problem.

Initial State : The state in which the agent starts or initial condition of the agent.

States : All states that are reachable from initial state by any sequence of actions or all possible states that the agent can take. This is also referred to as State space.

Actions : All possible actions that the agent can execute. Specifically, it provides the list of actions, that an agent can perform in a particular state. This is also referred to as Action space.

Transition Model : This property describes the results of each action taken in a particular state.

Goal Test : A way to check, whether a state is the goal.

Path Cost : A function that assigns a numeric cost to a path w.r.t. performance measure.

Types of Search : There are two types of searches

1.Uninformed Search , 2. Informed Search.

1.Uninformed Search , 2. Informed Search.

Comments

Post a Comment